## Intro

The idea of starting a blog has crossed my mind several times: a place to show interesting things I find and things I've worked on, and to write guides that help others (and my future self, since I may forget what I did, found, or fixed). I believe my last attempt was in 2017, when I made good progress on a completely custom design; then other priorities took over, and once again it was left half-finished.

This time I was more focused on my goal: keep it simple, find a nice-looking theme, a reliable static site generator, and start writing.

Besides being on annual leave for a few days, what gave me a little push was the arrival of my new, upgraded PC, which I could finally start setting up. Although I primarily use Linux, I do enjoy playing games when I have the chance. Unfortunately, that doesn't happen often enough, so I try not to spend too much of that time finding workarounds to make games run on Linux¹.

For years, I have dual-booted Manjaro Linux and Windows. Support for Windows 10 ends in October 2025 and my old PC isn't supported by Windows 11, so I decided to upgrade now, as prices might rise as October approaches. I used to go for cheaper builds, but this time I chose something higher up the price range so that I can experiment with local large language models (LLMs) and other generative AI models.

I thought sharing my current setup might interest those who take the time to read my new blog :) The main change is the addition of local LLMs and other generative AI tools and plugins. Although I have worked exclusively on projects involving LLMs for the last two years, at home I didn't have a powerful enough machine to explore local LLMs (I had an old RTX 2060), and I'm not a huge fan of spending money on cloud services. After some research, I went with the most mainstream options that fit my needs. I'm not entirely sure yet whether I made the right choice in every case, but I have to start somewhere. As the title suggests, this setup is the baseline I'll build on, and I'll share my progress with you along the way.

For the rest of this post, I'll give a quick overview of each tool and why I chose it. In future posts, I'll cover some of them in more detail.

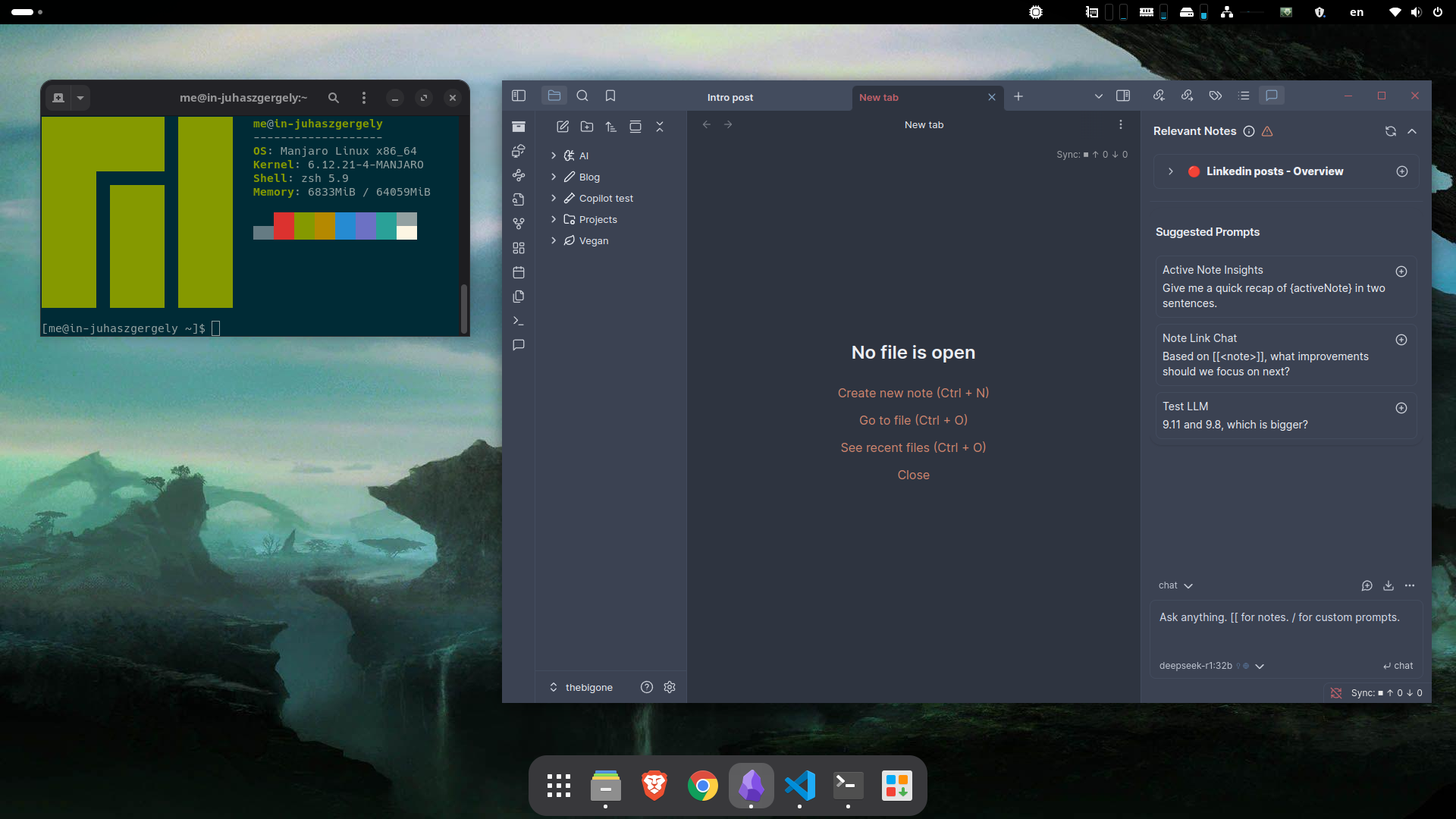

## Manjaro

Manjaro is a Linux distribution based on Arch Linux. Known for its user-friendly design, it offers several desktop environments, including GNOME, KDE Plasma, XFCE, and more. Manjaro is tailored for users who want a reliable operating system without spending hours troubleshooting dependency issues during updates or package installations.

When I first started using Linux around 2008, I began with Ubuntu, a well-known, beginner-friendly distribution. I used it for nearly a decade, but it was prone to breaking during updates and often lacked the latest package versions (by design). The turning point came in 2017, when I bought a gaming laptop with a dual-GPU setup (I still use it when I'm travelling). While trying to get it to work, I found myself agreeing with Linus Torvalds' opinion of NVIDIA, but to keep this short I won't go into the details of the issues. After testing multiple distros, I found Manjaro, which worked great out of the box, and I've been hooked ever since.

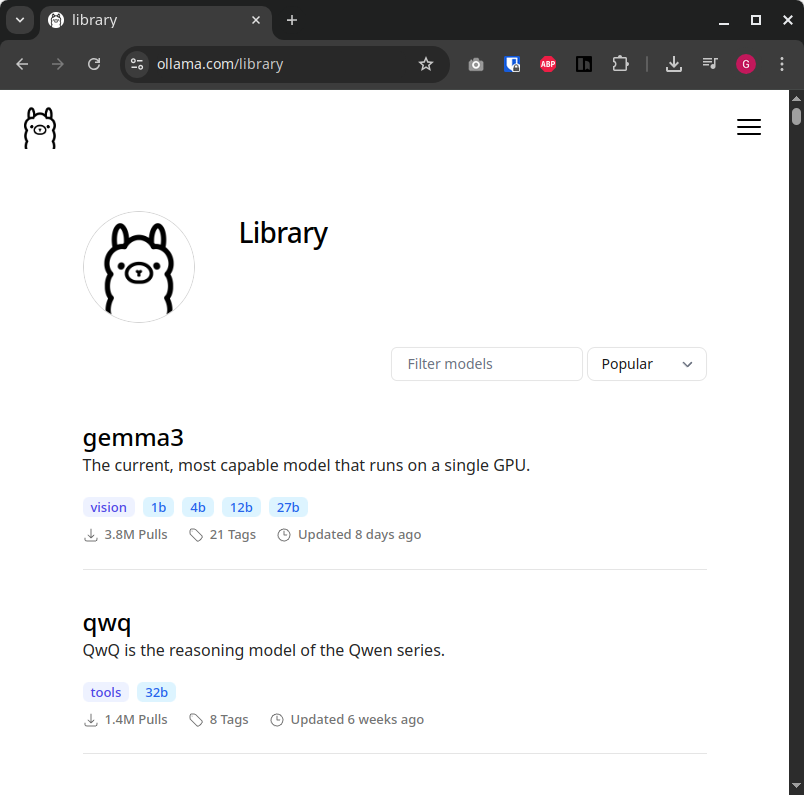

## Ollama

Ollama is a tool for downloading, managing, and running AI models, especially LLMs, on your own machine. Designed to be user-friendly and accessible, it makes it easy for developers and enthusiasts to work with LLMs on their own hardware. One of its standout features is how easily it handles multiple models, which is especially useful when experimenting with different LLMs. It integrates seamlessly with other tools (see a few examples below) and makes downloading and running models locally a smooth experience.

Running models locally has its pros and cons, a topic that certainly deserves a dedicated blog post of its own.
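Besides the terminal (`ollama pull`, `ollama run`), Ollama exposes a local HTTP API, which is how other tools can talk to it. Below is a minimal sketch using only Python's standard library, assuming Ollama is running on its default port (11434) and the model has already been pulled. The helper names (`build_generate_request`, `generate`, `model_names`, `list_local_models`) are my own, not part of any official Ollama client.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a POST request for Ollama's /api/generate endpoint."""
    # "stream": False asks for one JSON object instead of a stream of chunks.
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 300.0) -> str:
    """Send a prompt to a locally installed model and return its full answer."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        # The generated text is in the "response" field of the JSON reply.
        return json.load(resp)["response"]


def model_names(tags_body: dict) -> list[str]:
    """Extract model names from the JSON returned by GET /api/tags."""
    return [model["name"] for model in tags_body.get("models", [])]


def list_local_models(timeout: float = 10.0) -> list[str]:
    """Ask Ollama which models have been pulled onto this machine."""
    with urllib.request.urlopen(f"{OLLAMA_URL}/api/tags", timeout=timeout) as resp:
        return model_names(json.load(resp))
```

For example, `generate("gemma3:27b", "Tell me a fun fact about octopuses.")` would return the model's whole answer as a single string. With `"stream": True`, Ollama instead sends the answer back piece by piece as newline-delimited JSON objects, which is what chat interfaces use to display text as it is generated.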

## Open WebUI

Open WebUI offers a browser-based interface for local models that mirrors ChatGPT, whose chat interface was a game changer in raising awareness of LLMs. Out of the box, Ollama only lets you chat with models through the terminal (or programmatically through its HTTP API). Open WebUI, in contrast, streamlines interaction with LLMs, offering a simple way to upload files, record your voice, have responses read aloud, keep track of chat history, and so on, while relying on Ollama for model management.

qwq's fun fact

Here's another mind-blowing octopus fact for you: Octopuses can fit through incredibly tiny spaces! Because their bodies are almost entirely soft (the only hard part is their beak), they can squeeze through openings as small as a coin! In one famous experiment, an octopus escaped an aquarium by squeezing through a gap in the tank’s lid and slithering into a drain pipe. They’re basically escape artists of the sea. 🐙✨

Bonus weirdness: Their arms have independent nervous systems—so even after an arm is detached, it can still “decide” to crawl or grab things! Creepy-cool, right?

gemma3:27b's fun fact

Here's a fun fact about octopuses: Octopuses have three hearts!

Two hearts pump blood through the gills, and the third circulates blood to the organs. Interestingly, the organ heart actually stops beating when the octopus swims, which is why they tend to crawl rather than swim for long distances - it’s exhausting!

Pretty wild, right? 😊

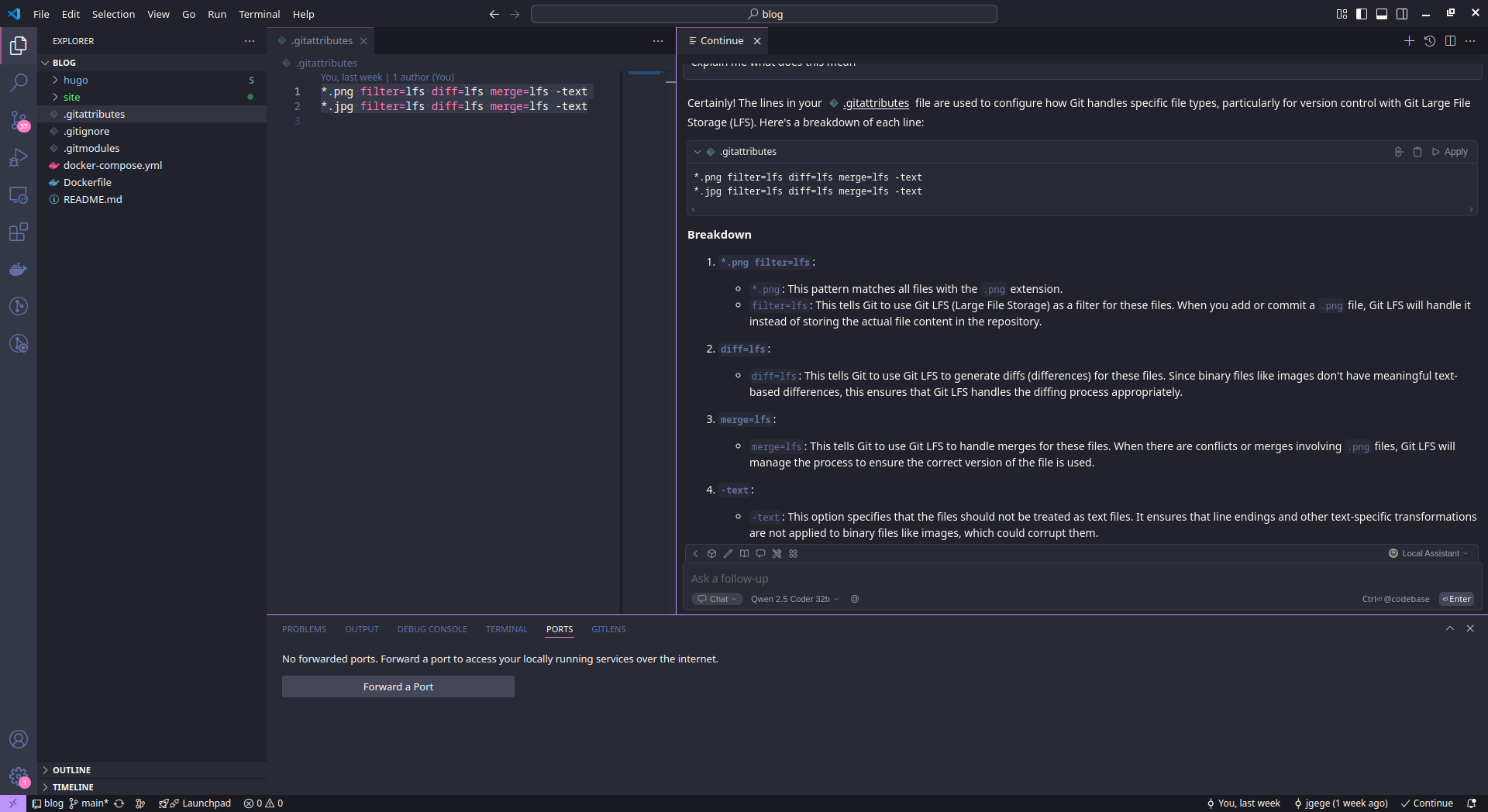

## Visual Studio Code + Continue

Several AI-powered code editors are based on Code OSS/VS Code, but many of them didn't fit my needs. Rather than switching to a new editor, I decided to try Continue, an AI assistant extension for VS Code that helps you write code faster. It integrates seamlessly with Ollama, so I can use any model locally. Its features include generating code snippets, writing documentation, explaining concepts, and brainstorming solutions. It looks promising, but I'm still exploring its full potential.

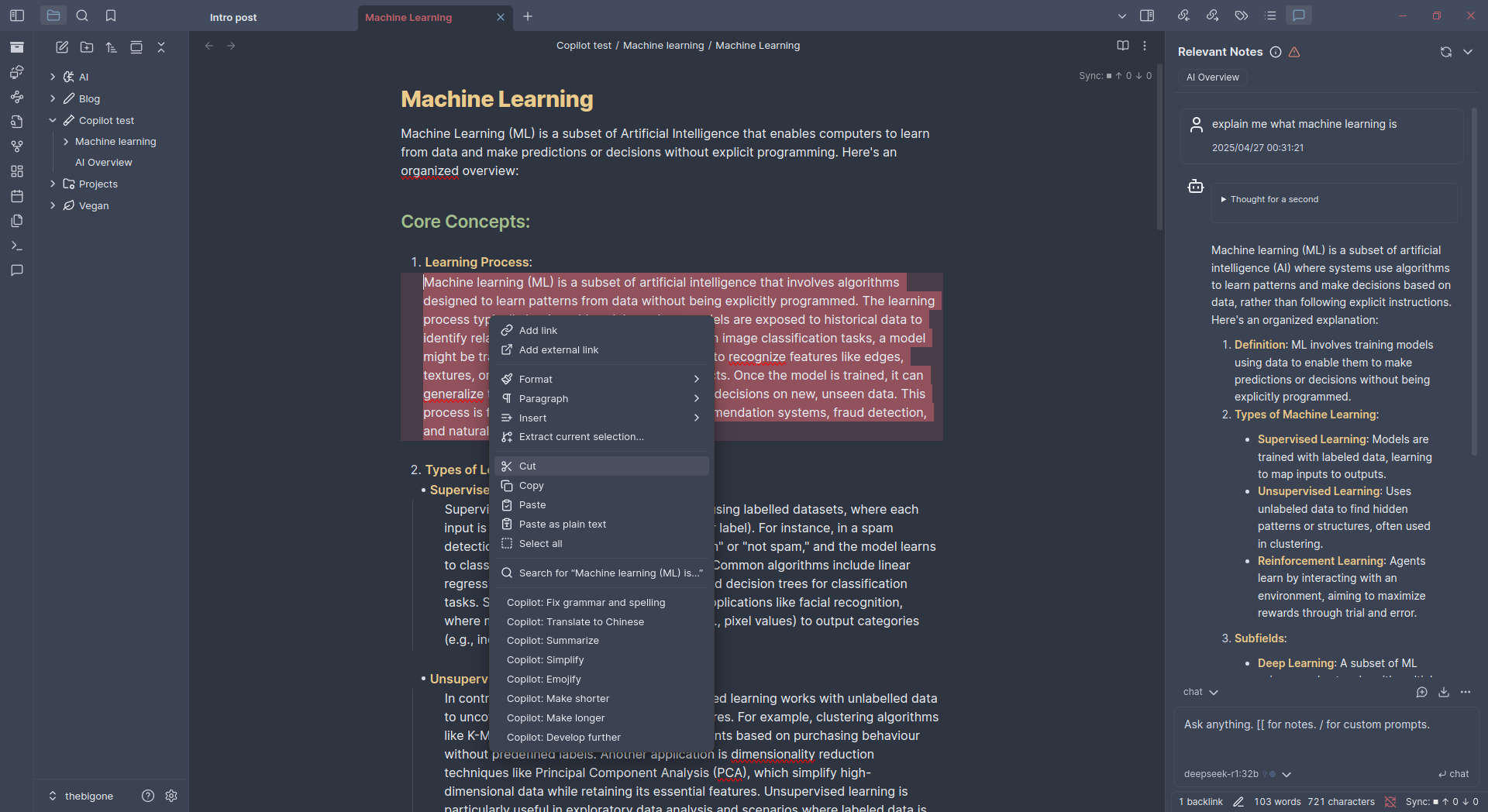

## Obsidian + Copilot + Self-hosted LiveSync

Obsidian Copilot is a plugin that brings LLM interaction into Obsidian. Among other tasks, it can generate text, summarise notes, and help identify and correct grammatical errors. It has been a real productivity boost in Obsidian, and it contributed to my motivation to start this blog (there are alternatives, but you get the idea!).

Finally, Self-hosted LiveSync is a plugin that syncs your Obsidian vaults across devices without relying on third-party services. I can add notes to my vault instantly, from my PC or my phone, anytime and anywhere, while keeping full control over my data and not depending on external providers. I'll cover self-hosting in more depth in future posts!

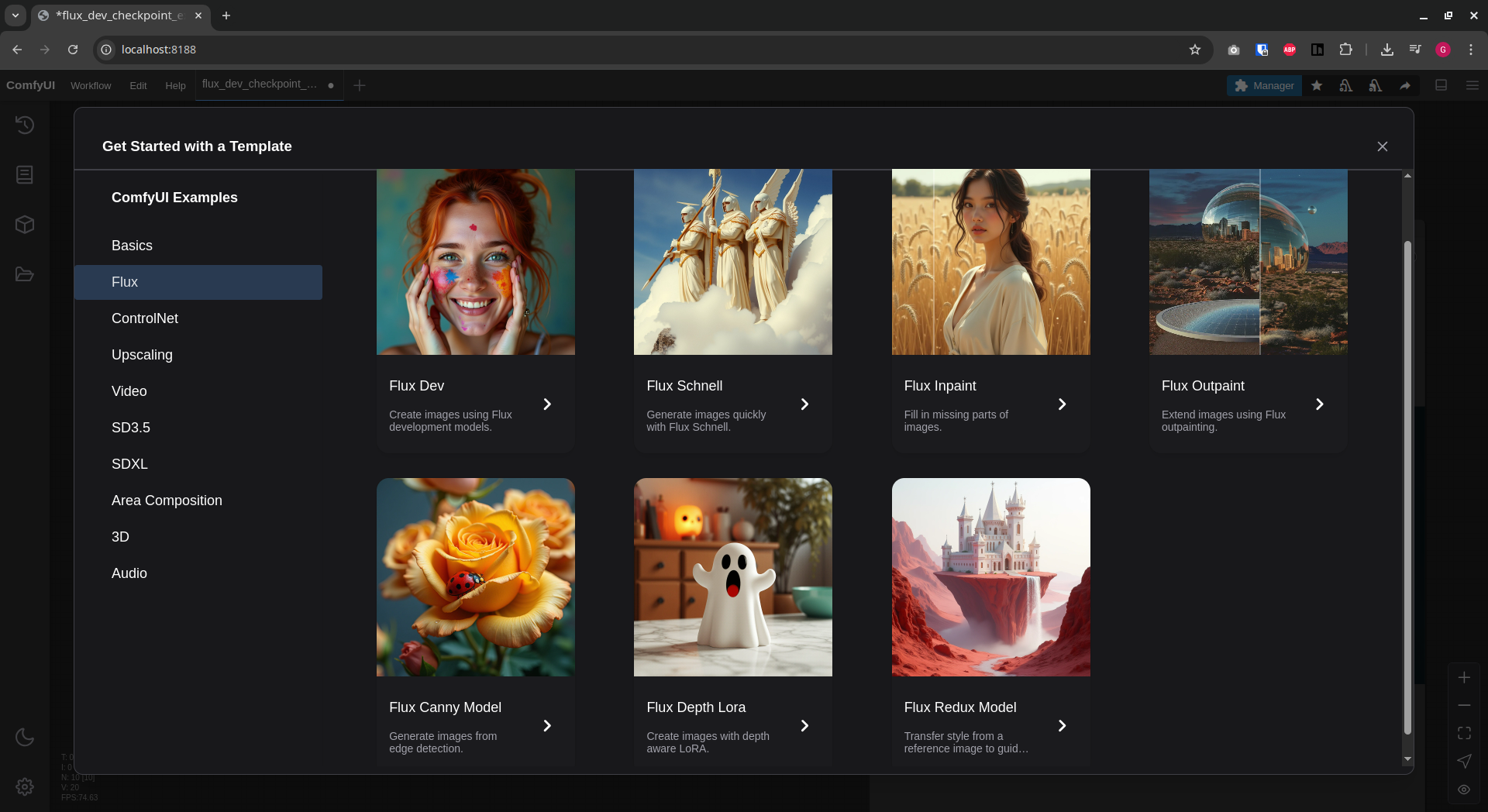

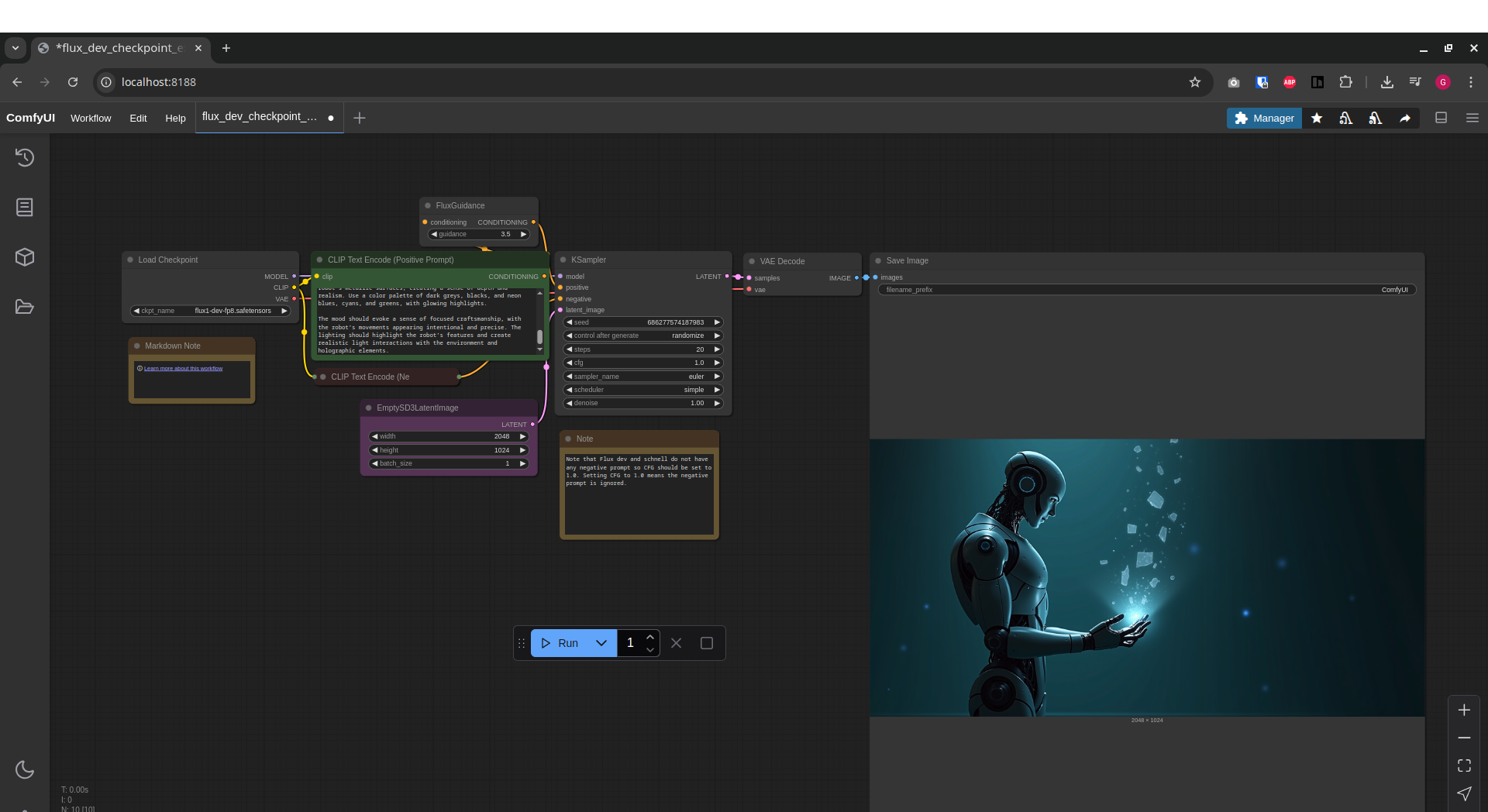

## ComfyUI

ComfyUI is a flexible and powerful node-based web interface for creating images and videos with generative models. It offers a wide range of customisation options and supports various workflows and models, making it suitable for both beginners and advanced users.

The cover image for this blog post was created² with ComfyUI using Flux.

## Hugo + Blowfish

Although unrelated to LLMs, I wanted to give credit to Hugo, the static site generator that powers this website, and Blowfish, the amazing and versatile theme I'm using. In the past, I tended to build static websites from scratch, but Hugo is so flexible that I haven't yet come across a problem I couldn't solve with it. It makes building simple websites quick and fun! One huge advantage of a static website over blog engines like WordPress is that it's easy to host anywhere, literally for free, without stressing over security updates or patches!
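Because a Hugo site is just a folder of files (running `hugo` writes the generated site to `public/` by default), any static file server can host it. As a minimal sketch, assuming you want to preview the built output with nothing but Python's standard library (for day-to-day writing, Hugo's own `hugo server` is the usual choice), the hypothetical helper `make_static_server` below serves that folder:

```python
import functools
import http.server


def make_static_server(
    site_dir: str = "public", host: str = "127.0.0.1", port: int = 8000
) -> http.server.ThreadingHTTPServer:
    """Create (but don't start) a server that serves the files in site_dir."""
    # SimpleHTTPRequestHandler accepts a `directory` argument (Python 3.7+),
    # so bind it with functools.partial instead of changing the working directory.
    handler = functools.partial(http.server.SimpleHTTPRequestHandler, directory=site_dir)
    # ThreadingHTTPServer handles each request in its own thread.
    return http.server.ThreadingHTTPServer((host, port), handler)
```

Calling `make_static_server().serve_forever()` and opening http://127.0.0.1:8000 shows the site. The server never executes anything on request; it only reads files, which is exactly why a static site has so little to patch.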

---

¹ Most games should work on Linux, either natively or through a compatibility layer such as Wine or Proton. However, I've run into issues multiple times :(

² I tried to generate an image of a genderless robot, but the model didn't quite get it: it made the robot either obviously feminine (see the image) or masculine, the Spartan type. Either the model has a heavy bias, or I need to work a lot more on my prompting skills.