## Problem

I wanted to try some new local LLMs, so while I was poking around, I decided to update both Ollama (to v0.14.2) and Open WebUI (to v0.7.2).

After the update, Open WebUI (OWUI) listed none of my models, even though the connection test from the UI to Ollama succeeded.

Ollama runs directly on my host machine, while OWUI runs in a Docker container. With earlier OWUI versions, `http://localhost:11434` worked fine as the Ollama URL, but one of the newer releases appears to have broken this.
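On Docker's default bridge network, `localhost` inside a container refers to the container itself, not the host. A passing connection test and an empty model list can point at different problems. One way to tell "reachable but empty" apart from "unreachable" is to query Ollama's `/api/tags` endpoint, which lists local models, at each candidate URL from inside the container. This is a minimal sketch and not part of OWUI or Ollama. The candidate URLs are illustrative. It assumes `python3` is available in the container, so you could run it with something like `docker exec -it <container> python3 probe.py`, where the container name and file name are placeholders.

```python
from __future__ import annotations

import http.client
import json
import urllib.request

# Illustrative candidates (adjust to your setup): the container's own
# loopback, and the host as seen through the default Docker bridge gateway.
CANDIDATES = [
    "http://localhost:11434",
    "http://172.17.0.1:11434",
]


def list_models(base_url: str, timeout: float = 3.0) -> list[str] | None:
    """Return model names from Ollama's /api/tags, or None if unreachable."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
            payload = json.load(resp)
    except (OSError, ValueError, http.client.HTTPException):
        # URLError, HTTPError and timeouts are OSError subclasses;
        # ValueError covers a response body that is not valid JSON.
        return None
    return [m.get("name", "?") for m in payload.get("models", [])]


def main() -> None:
    for url in CANDIDATES:
        models = list_models(url)
        if models is None:
            print(f"{url}: unreachable")
        elif not models:
            print(f"{url}: reachable, but no models")
        else:
            print(f"{url}: {len(models)} model(s): {', '.join(models)}")


if __name__ == "__main__":
    main()
```

A "reachable, but no models" result would match a connection test that passes while the model list stays empty. An "unreachable" result means that URL cannot work as the OWUI Ollama connection.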

## Solution

A web search for similar problems turned up nothing useful, so, wanting a quick fix, I went straight to ChatGPT. It had a few useful suggestions, and it concluded that this version of OWUI probably includes its own Ollama instance inside the container. I opened a shell in the container to confirm:

```console
root@b4ff5b12dc4c:/app/backend# ollama
Usage:
  ollama [flags]
  ollama [command]

Available Commands:
  serve       Start ollama
  create      Create a model
  show        Show information for a model
  run         Run a model
  stop        Stop a running model
  pull        Pull a model from a registry
  push        Push a model to a registry
  signin      Sign in to ollama.com
  signout     Sign out from ollama.com
  list        List models
  ps          List running models
  cp          Copy a model
  rm          Remove a model
  help        Help about any command

Flags:
  -h, --help      help for ollama
  -v, --version   Show version information

Use "ollama [command] --help" for more information about a command.
root@b4ff5b12dc4c:/app/backend# ollama -v
Warning: could not connect to a running Ollama instance
Warning: client version is 0.13.5
```

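It's worth mentioning that I'm running the `ghcr.io/open-webui/open-webui:ollama` image. This is the variant that bundles Ollama, which explains why the container has its own `ollama` binary with an older 0.13.5 client.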

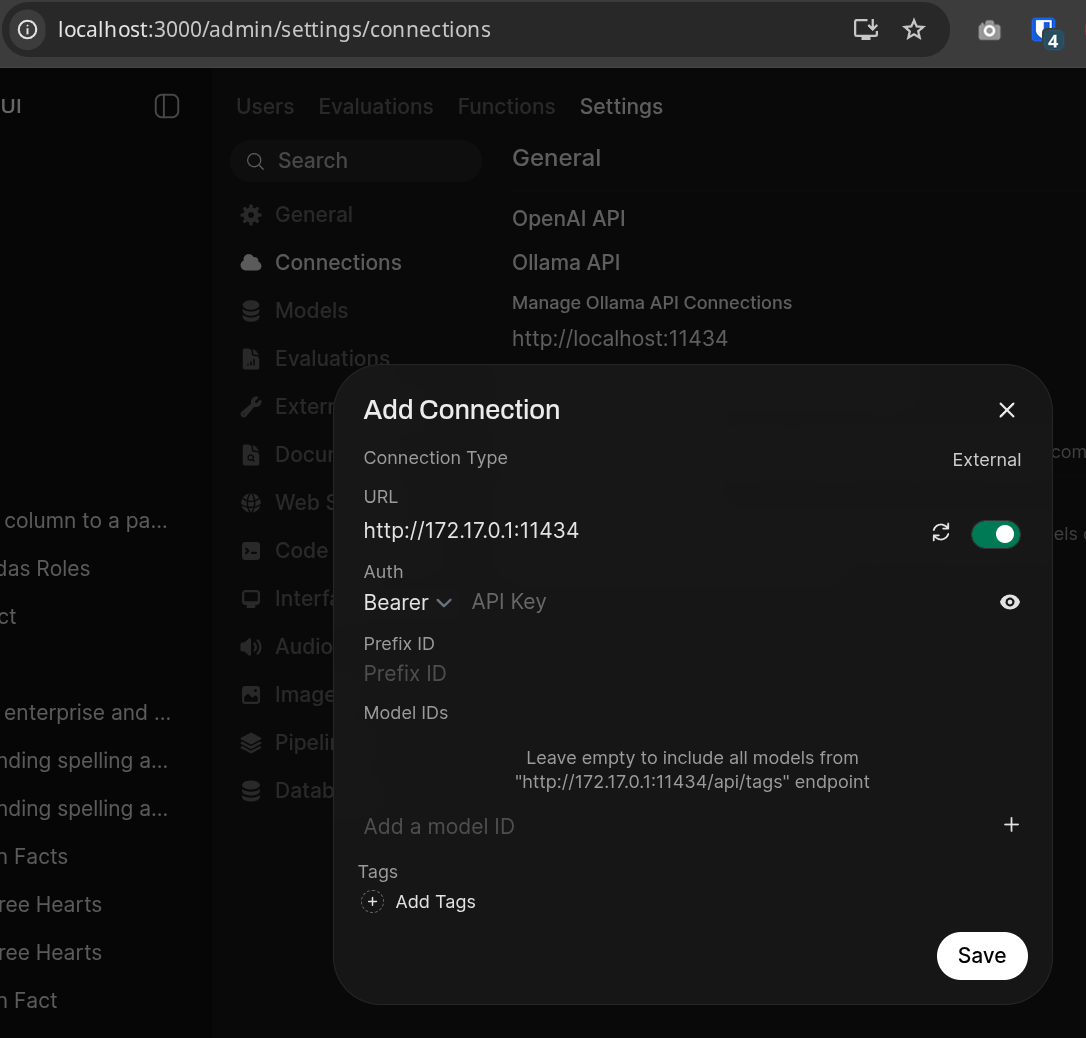

I'm not sure exactly what changed or when. The fix was to change the Ollama URL in OWUI's configuration from `localhost` to the Docker bridge gateway, `http://172.17.0.1:11434`. After that, everything worked fine.
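172.17.0.1 is the gateway of Docker's default `bridge` network. A container attached to a user-defined network, such as one created by Docker Compose, typically sees a different gateway address. Instead of hard-coding the address, a Linux-only sketch can read the container's default gateway from `/proc/net/route`. This is a hypothetical helper that OWUI does not provide. It also assumes the host's Ollama actually listens on the bridge interface. By default Ollama binds to `127.0.0.1`, so the host may need `OLLAMA_HOST` set (for example to `0.0.0.0`) before the bridge address answers.

```python
from __future__ import annotations

import socket
import struct

RTF_GATEWAY = 0x2  # route flag: destination is reached via a gateway


def default_gateway(route_table: str) -> str | None:
    """Return the IPv4 default gateway from /proc/net/route-style text, or None."""
    for line in route_table.splitlines()[1:]:  # first line is the column header
        fields = line.split()
        if len(fields) < 4:
            continue
        destination, gateway, flags = fields[1], fields[2], int(fields[3], 16)
        # Destination 00000000 marks the default route.
        if destination == "00000000" and flags & RTF_GATEWAY:
            # The kernel prints addresses as hex in native byte order, so
            # packing with "=L" restores the original network-order bytes.
            return socket.inet_ntoa(struct.pack("=L", int(gateway, 16)))
    return None


if __name__ == "__main__":
    try:
        with open("/proc/net/route") as f:
            gateway = default_gateway(f.read())
    except OSError:
        gateway = None  # not Linux, or /proc is not mounted
    if gateway:
        print(f"Candidate Ollama URL: http://{gateway}:11434")
    else:
        print("No IPv4 default gateway found")
```

On the default bridge network this would print `http://172.17.0.1:11434`. On a Compose network it would print whatever gateway that network uses.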

## Additional notes

After fixing the issue, I asked `qwen3-vl:32b` the same questions. Surprisingly, it reached the right conclusion, which confirmed that the fix itself was correct. However, every link and version number it mentioned was completely made up; see the full conversation here.

I then configured web search to give it access to more up-to-date information, hoping that would help, but it still didn't quite get there; here's my quick test.

As you can see, there are still some limitations when it comes to smaller models.